Memes Classification Project

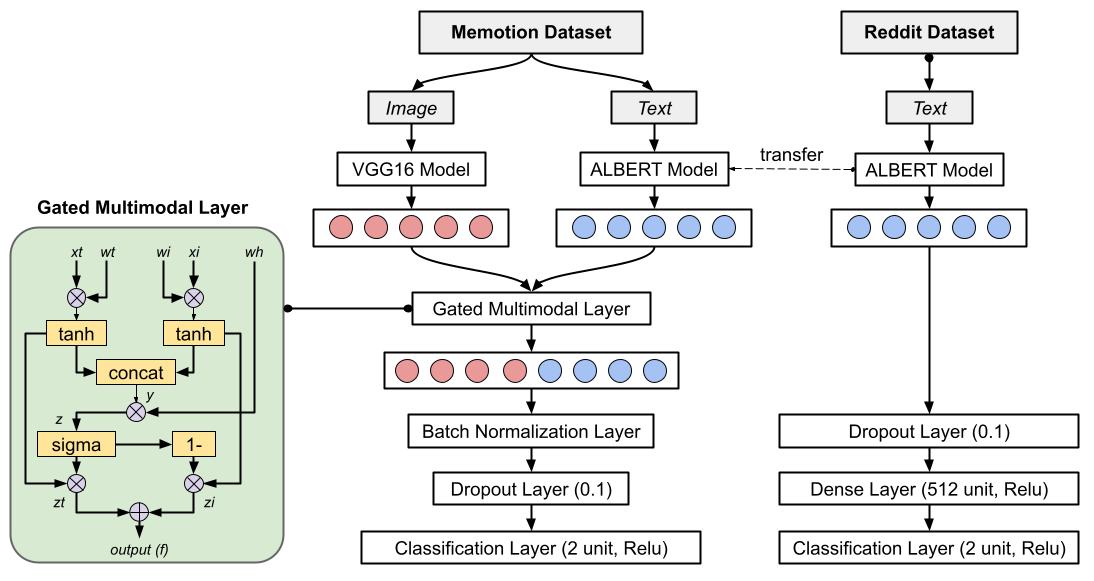

Meme analysis has become an essential research topic. A meme combines a graphic (an image) with embedded text, and it often carries more than one message, exerting emotional influence on each person who reads it. In this project, I propose the Memotion Multimodal Model (M4 Model) for humor detection on the Memotion dataset. [Codes]
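Because a meme carries both an image and text, a multimodal model has to combine the two signals before classifying humor. The sketch below shows one common way to do this, early fusion by concatenating an image feature vector with a text feature vector and passing the result through a linear layer and softmax. This is a generic illustration under stated assumptions, not the M4 Model's actual architecture. The feature vectors, weights, number of humor classes, and the helper names `fuse_features`, `classify_humor`, and `predict_class` are all hypothetical. In practice the features would come from pretrained image and text encoders and the weights would be learned.

```python
import math
from typing import List


def fuse_features(image_vec: List[float], text_vec: List[float]) -> List[float]:
    """Early fusion by concatenation (hypothetical; not necessarily what M4 does)."""
    return image_vec + text_vec


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_humor(
    image_vec: List[float],
    text_vec: List[float],
    weights: List[List[float]],  # one row per humor class (hypothetical learned weights)
    biases: List[float],         # one bias per humor class
) -> List[float]:
    """Return a probability per humor class for one meme's fused features."""
    fused = fuse_features(image_vec, text_vec)
    if len(weights) != len(biases):
        raise ValueError("need exactly one bias per weight row")
    logits = []
    for row, b in zip(weights, biases):
        if len(row) != len(fused):
            raise ValueError("weight row length must match fused feature length")
        # Linear layer: dot product of the class weights with the fused features.
        logits.append(sum(w * x for w, x in zip(row, fused)) + b)
    return softmax(logits)


def predict_class(probs: List[float]) -> int:
    """Index of the most probable humor class."""
    return max(range(len(probs)), key=lambda i: probs[i])


if __name__ == "__main__":
    # Toy 2-D image features, 1-D text features, and two hypothetical classes.
    probs = classify_humor([0.2, 0.8], [0.5], [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]], [0.0, 0.0])
    print(probs, predict_class(probs))
```

Concatenation is the simplest fusion choice: it keeps both modalities' features intact and lets the classifier learn how they interact. Alternatives such as attention-based fusion, or late fusion of per-modality predictions, trade that simplicity for more modeling capacity.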

Details