Near miss (Before)

Class Imbalance Study

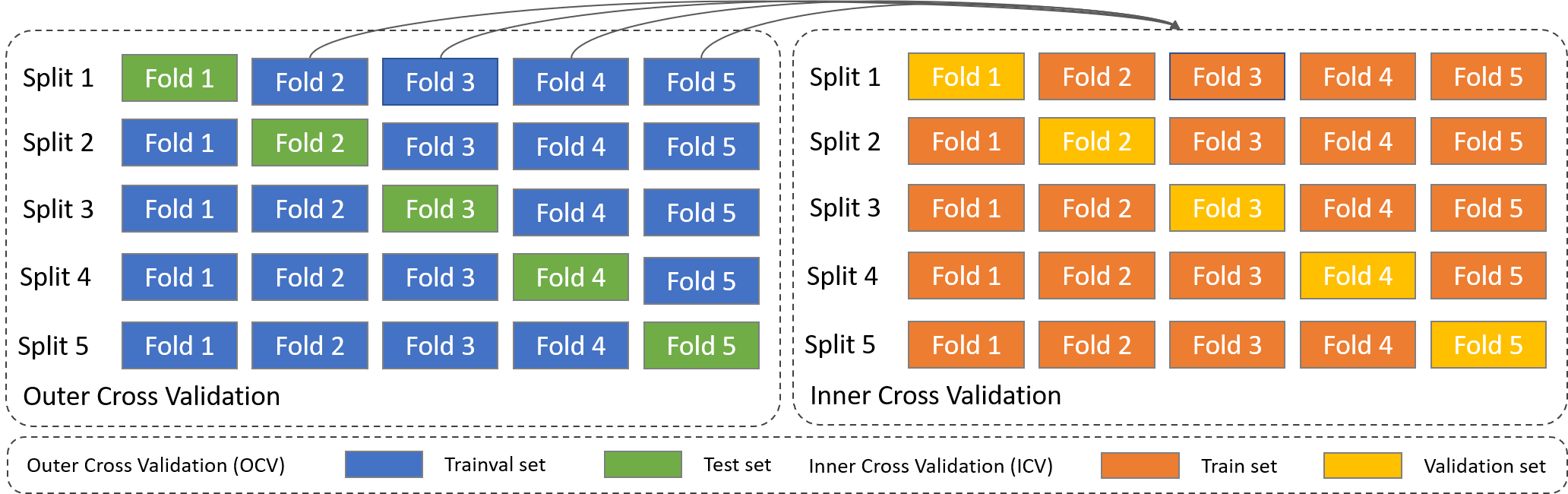

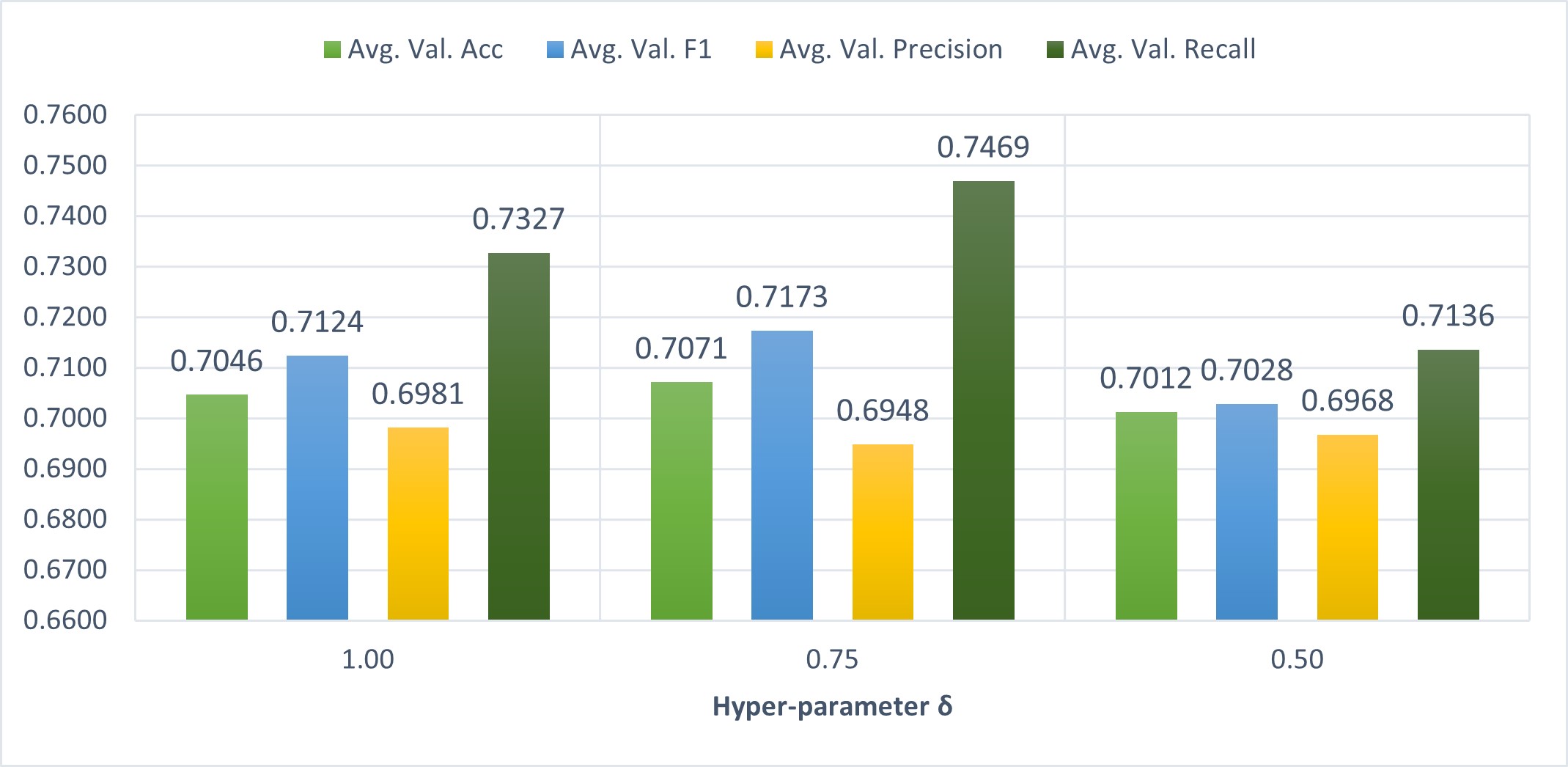

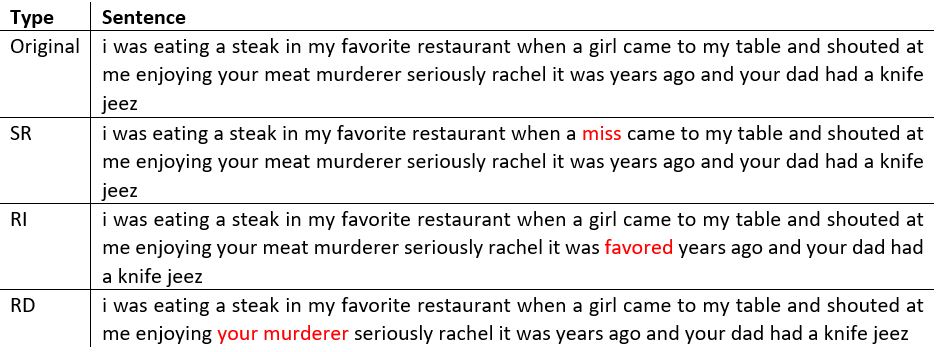

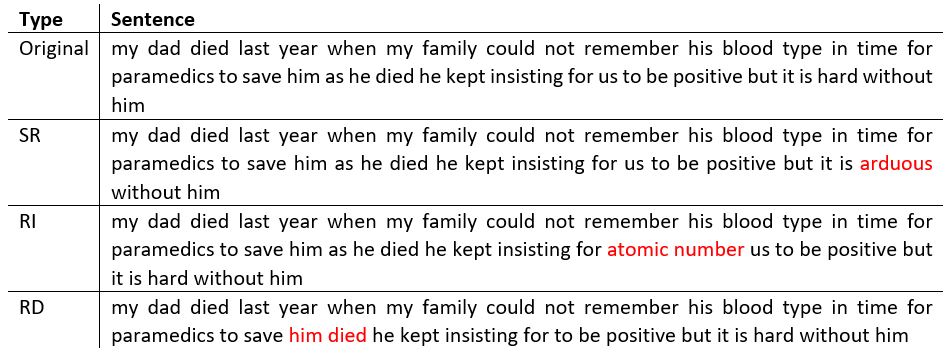

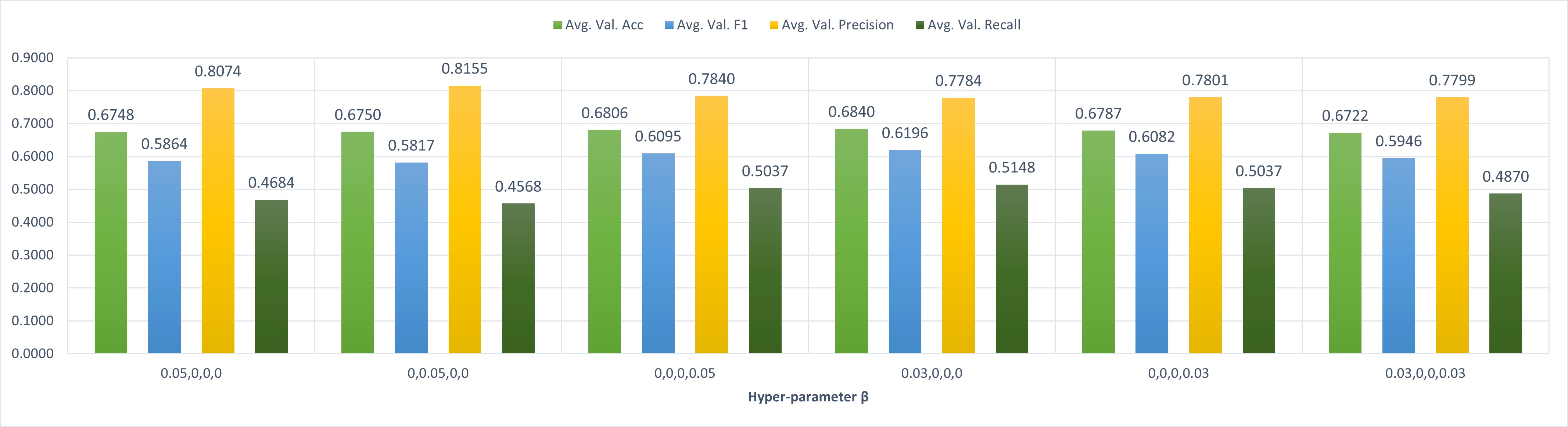

This project aims to alleviate the class imbalance problem in a text classification dataset by applying sampling methods such as resampling, Near Miss, and EDA. We systematically run extensive experiments with nested cross-validation to compare classification performance under three sampling settings. [Codes]
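To make the evaluation protocol concrete, here is a minimal sketch of how nested cross-validation splits can be generated. It is an illustration under assumptions, not the project's actual code: the function names `k_fold_indices` and `nested_cv_splits`, the fold counts, and the seed are all hypothetical, and the folds are not stratified (a real imbalanced-data experiment would likely stratify by class).

```python
import random


def k_fold_indices(indices, k, seed=0):
    """Shuffle the indices and split them into k roughly equal folds."""
    rng = random.Random(seed)
    shuffled = list(indices)
    rng.shuffle(shuffled)
    return [shuffled[i::k] for i in range(k)]


def nested_cv_splits(n_samples, outer_k=5, inner_k=3, seed=0):
    """Yield (outer_train, outer_test, inner_splits) as lists of indices.

    inner_splits holds (inner_train, inner_val) pairs drawn only from
    outer_train, so the outer test fold is never touched during tuning.
    """
    outer_folds = k_fold_indices(range(n_samples), outer_k, seed)
    for i, outer_test in enumerate(outer_folds):
        outer_train = [
            idx for j, fold in enumerate(outer_folds) if j != i for idx in fold
        ]
        inner_folds = k_fold_indices(outer_train, inner_k, seed + 1 + i)
        inner_splits = []
        for m, inner_val in enumerate(inner_folds):
            inner_train = [
                idx for j, fold in enumerate(inner_folds) if j != m for idx in fold
            ]
            inner_splits.append((inner_train, inner_val))
        yield outer_train, outer_test, inner_splits
```

In a setup like this, any sampling step would normally be applied to `inner_train` or `outer_train` only, never to the validation or test fold, so that the reported scores reflect the original class distribution.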

Details

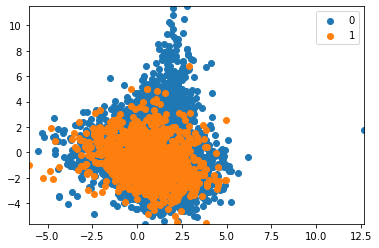

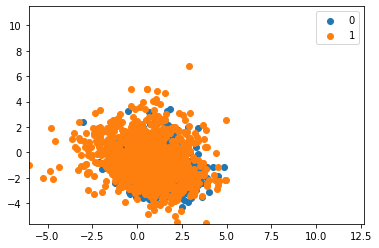

![Near miss [2]](assets/img/Project/Project6_Class_Imbalance/project6_nearmiss.png)
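The idea behind Near Miss can be sketched in a few lines. The version below follows the NearMiss-1 rule (keep the majority samples whose average distance to their `k` nearest minority samples is smallest). It is a hedged illustration: the function name `near_miss_1`, the Euclidean distance, and `k=3` are assumptions, and the vectors stand in for whatever text features (for example TF-IDF) the project actually uses.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def near_miss_1(majority, minority, n_keep, k=3):
    """Undersample the majority class with a NearMiss-1 style rule.

    For each majority vector, average the distances to its k nearest
    minority vectors, then keep the n_keep vectors with the smallest
    averages. Assumes `minority` is non-empty.
    """
    scored = []
    for idx, vec in enumerate(majority):
        dists = sorted(euclidean(vec, m) for m in minority)
        nearest = dists[:k]
        scored.append((sum(nearest) / len(nearest), idx))
    scored.sort()
    # Restore the original order of the retained samples.
    keep = sorted(idx for _, idx in scored[:n_keep])
    return [majority[i] for i in keep]
```

Because the retained majority samples sit close to the minority class, this rule tends to concentrate the training data around the decision boundary, which is also why it can be sensitive to noisy minority points.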

![Oversampling [2]](assets/img/Project/Project6_Class_Imbalance/project6_oversampling.png)

![Undersampling [2]](assets/img/Project/Project6_Class_Imbalance/project6_undersampling.png)
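The two figures above correspond to the simplest form of resampling, which can be sketched as follows. This is a minimal illustration assuming random over- and undersampling to a fully balanced class ratio; the function name `random_resample`, the `mode` argument, and the toy data are hypothetical and not taken from the project code.

```python
import random
from collections import Counter, defaultdict


def random_resample(texts, labels, mode="over", seed=0):
    """Balance classes by random oversampling or undersampling.

    mode="over":  duplicate random samples until every class matches
                  the largest class.
    mode="under": drop random samples until every class matches
                  the smallest class.
    """
    if mode not in ("over", "under"):
        raise ValueError("mode must be 'over' or 'under'")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for text, label in zip(texts, labels):
        by_class[label].append(text)
    sizes = [len(items) for items in by_class.values()]
    target = max(sizes) if mode == "over" else min(sizes)

    out_texts, out_labels = [], []
    for label, items in by_class.items():
        if len(items) >= target:
            # Sample without replacement (keeps everything if sizes match).
            chosen = rng.sample(items, target)
        else:
            # Keep all originals and add random duplicates.
            chosen = items + rng.choices(items, k=target - len(items))
        out_texts.extend(chosen)
        out_labels.extend([label] * target)
    return out_texts, out_labels


# Toy example: four majority-class texts and two minority-class texts.
texts = ["a", "b", "c", "d", "e", "f"]
labels = [0, 0, 0, 0, 1, 1]
over_texts, over_labels = random_resample(texts, labels, mode="over")
under_texts, under_labels = random_resample(texts, labels, mode="under")
print(Counter(over_labels), Counter(under_labels))
```

Oversampling keeps every original example but repeats minority texts, which can encourage overfitting to them; undersampling avoids duplicates at the cost of discarding majority data.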